Introduction

Web scraping is the process of extracting data, such as text, images, and videos, from websites. Tools commonly used for web scraping include Zenrows and Puppeteer (a Node.js library for controlling a Chrome or Chromium browser). Such tools are often called web scrapers or web scraping tools.

People extract data for many reasons, including data analysis, building APIs, testing, and automation.

This article is a practical guide to scraping YouTube with Puppeteer: you will extract details about several YouTubers' channels and then store them in a new JSON file. Scraping can lead to legal issues when done carelessly. The channel details collected here are publicly visible, but it is still worth reviewing YouTube's Terms of Service before you scrape it.

How a Web Scraping Tool Works

A web scraper (or web scraping tool) works in the following steps:

It sends an HTTP request to the website's URL.

It parses the returned web page and extracts the data specified in your code.

It then saves or processes the extracted data.
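The three steps above can be sketched in plain Node.js, without Puppeteer. This is a minimal illustration, assuming Node.js 18 or later (where `fetch` is built in) and a page whose data is already present in the raw HTML. The function names `fetchPage`, `extractTitle`, `saveJson`, and `scrapeTitle` are hypothetical, and the regex-based parsing is only adequate for a toy task like reading the `<title>` tag.

```javascript
const fs = require('fs');

// Step 1: send an HTTP request and return the raw HTML.
// Uses the global fetch() built into Node.js 18+.
async function fetchPage(url) {
  const res = await fetch(url);
  if (!res.ok) {
    throw new Error(`Request to ${url} failed with status ${res.status}`);
  }
  return res.text();
}

// Step 2: parse the HTML and extract the data you need.
// A regular expression is fine for this toy example (the page title),
// but real scrapers should use a DOM parser or a headless browser.
function extractTitle(html) {
  const match = html.match(/<title[^>]*>([\s\S]*?)<\/title>/i);
  return match ? match[1].trim() : null;
}

// Step 3: save the extracted data, here as pretty-printed JSON.
function saveJson(path, data) {
  fs.writeFileSync(path, JSON.stringify(data, null, 2), 'utf8');
}

// Run all three steps for one URL.
async function scrapeTitle(url, outFile) {
  const html = await fetchPage(url);
  const data = { url, title: extractTitle(html) };
  saveJson(outFile, data);
  return data;
}
```

For example, `scrapeTitle('https://example.com', 'title.json')` would request the page, pull out its title, and write it to `title.json`. A JavaScript-heavy site such as YouTube builds much of its visible page in the browser, which is why a headless browser like Puppeteer is a better fit for it than a plain HTTP request.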

Steps to Scraping a Website

To scrape a website, here are the common steps you should take:

Choose the website: First, decide which website you want to scrape and what data you want to extract. Then note the page's URL, since your scraper will send its requests there.

Examine the page(s): Before you can scrape data from a website, you need to understand the page structure and how its elements are identified (e.g. by IDs or classes). Your browser's developer tools (right-click an element and choose Inspect) are the usual way to do this.

Implement the scraper: Write the code that performs the actual data extraction using your chosen web scraping tool.

Store or process the data: Once you have extracted the data you need, decide what to do with it. For example, you can store it locally in a format such as JSON, CSV, or Excel.
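As an example of the last step, here is a minimal sketch that turns an array of flat objects (like the profile records produced later in this article) into CSV text that a spreadsheet can open. The helper names `csvField` and `toCsv` are hypothetical. The sketch quotes every field and doubles embedded quotes, following the common CSV convention described in RFC 4180, and it assumes every object has the same keys as the first one.

```javascript
// Quote a single value for CSV: wrap it in double quotes and
// escape any embedded double quotes by doubling them.
function csvField(value) {
  const text = value === null || value === undefined ? '' : String(value);
  return `"${text.replace(/"/g, '""')}"`;
}

// Convert an array of flat objects into CSV text (CRLF line endings).
// Column order is taken from the keys of the first object.
function toCsv(rows) {
  if (rows.length === 0) return '';
  const headers = Object.keys(rows[0]);
  const lines = [headers.map(csvField).join(',')];
  for (const row of rows) {
    lines.push(headers.map((key) => csvField(row[key])).join(','));
  }
  return lines.join('\r\n');
}
```

With Node's `fs` module, something like `fs.writeFileSync('profileData.csv', toCsv(data))` would save the result as a file. Quoting every field is slightly verbose, but it keeps values that contain commas or quotes intact.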

Prerequisites

To follow along properly, you have to be familiar with the following technologies:

JavaScript

Basic usage of Puppeteer

Basic usage of Node.js

Project Installation

Make sure Node.js is installed on your machine (it includes npm, which is used below). Then create a new folder named Web-Scraping.

Inside the folder, create an index.js file. This is where your code will go.

Open your terminal in the folder and initialize it as a Node.js project by running the following command (add -y to accept the default answers):

```bash
npm init
```

This creates a package.json file that records your project's dependencies (in this case, Puppeteer).

Next, install Puppeteer by running:

```bash
npm i puppeteer
```

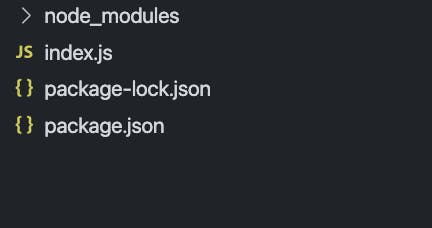

This will install the necessary packages required. Your folder structure should look like this:

Code Implementation

Open your index.js file and paste the following code:

```javascript
const puppeteer = require('puppeteer');
const fs = require('fs');

const url = 'https://youtube.com';

// Function to get a YouTuber's channel data
async function getUserData(user) {
  // { headless: true } tells Puppeteer to run the browser under the
  // hood, i.e. you don't see the browser window
  const browser = await puppeteer.launch({ headless: true });

  try {
    const page = await browser.newPage();
    // Wait for network activity to settle, because YouTube renders the
    // channel header with JavaScript after the initial page load
    await page.goto(`${url}/@${user}`, { waitUntil: 'networkidle2' });

    // Runs inside the browser page; the returned object is sent back to Node.js.
    // These element IDs match YouTube's markup at the time of writing and may change.
    return await page.evaluate(() => {
      // Return an element's text, or null if the element is missing
      const text = (id) => document.getElementById(id)?.innerText ?? null;

      return {
        avatar: document.getElementById('img')?.src ?? null,
        channelName: text('channel-name'),
        channelHandle: text('channel-handle'),
        totalSubscribers: text('subscriber-count'),
        totalVideos: text('videos-count'),
      };
    });
  } finally {
    // Always close the browser, even if navigation or extraction fails
    await browser.close();
  }
}

// YouTubers' profiles that we want to extract
const users = ['mkbhd', 'mrbeast', 'apple', 'google', 'microsoft'];

// Scrape all users concurrently, then store their profile data in a new JSON file
Promise.all(users.map((user) => getUserData(user)))
  .then((data) => {
    fs.writeFile('profileData.json', JSON.stringify(data, null, 2), 'utf8', (err) => {
      if (err) {
        console.error('Failed to create file!', err);
        return;
      }
      console.log(data);
    });
  })
  .catch((err) => console.error('Scraping failed:', err));
```

This code uses Puppeteer to visit the YouTube channel page of each user in the list (here mkbhd, mrbeast, apple, google, and microsoft) and retrieve their avatar, channel name, channel handle, subscriber count, and video count. It then creates a new file, profileData.json, and stores the retrieved data in it.

Explanation

The first two lines import Puppeteer and Node's built-in File System (fs) module. Because most Puppeteer methods return promises, the scraping logic is wrapped in an async function so it can await each step.

The async function getUserData takes a YouTube handle (the name after the @ in a channel URL), extracts that channel's details, and returns them as an object.

Inside getUserData, puppeteer.launch() starts a browser, browser.newPage() opens a new tab, and page.goto() navigates to the channel page, whose address is built by joining the base URL and the handle (for example, https://youtube.com/@mkbhd). The page.evaluate() method then runs the function you pass to it inside the page itself, so you can use regular DOM methods such as document.getElementById() to read content; the serializable value that function returns is sent back to your Node.js code. The element IDs used here reflect YouTube's markup at the time of writing and may change, so if extraction fails, inspect the channel page again and update them. Finally, browser.close() shuts the browser down.
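Because the page address is built by joining the base URL and the handle with a template string, a handle passed with a leading @ (or containing characters that are not URL-safe) would produce a broken address. A small helper like the hypothetical `channelUrl` below (a sketch, not part of Puppeteer) normalizes the handle and lets the standard `URL` constructor assemble the address; getUserData could call it in place of the template string.

```javascript
// Build a YouTube channel URL from a handle such as 'mkbhd' or '@mkbhd'.
// channelUrl is a hypothetical helper, not part of Puppeteer.
function channelUrl(handle, base = 'https://youtube.com') {
  // Drop surrounding whitespace and any leading '@' so it is not doubled.
  const clean = handle.trim().replace(/^@+/, '');
  if (clean === '') {
    throw new Error('Channel handle must not be empty');
  }
  // encodeURIComponent escapes characters such as spaces or '/',
  // and new URL() resolves the path against the base address.
  return new URL(`/@${encodeURIComponent(clean)}`, base).href;
}
```

For instance, `channelUrl('@MrBeast')` and `channelUrl('MrBeast')` both resolve to the same channel address, so callers don't have to remember which form to pass.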

Because getUserData is self-contained, it can be reused for any number of users. Promise.all() runs the calls for all five users concurrently (each in its own browser) and waits until they have all finished. The fs.writeFile() method from Node's File System module then creates profileData.json and writes the data to it as JSON. You can use this file however you like, for example as the data source for an API or, after converting it to CSV, in a spreadsheet.
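Promise.all() starts every getUserData call at once, so scraping five channels opens five browsers in parallel. For a longer list of users you might want to cap how many run at the same time. The sketch below shows one way to do that with a small pool of workers; `mapWithLimit` is a hypothetical helper, not a Puppeteer or Node.js API.

```javascript
// Run an async function over every item, with at most `limit` calls
// in flight at once. Results keep the same order as `items`.
async function mapWithLimit(items, limit, fn) {
  const results = new Array(items.length);
  let next = 0; // index of the next item to start

  // Each worker repeatedly claims the next unprocessed index.
  // JavaScript runs this synchronously between awaits, so no two
  // workers can claim the same index.
  async function worker() {
    while (next < items.length) {
      const index = next++;
      results[index] = await fn(items[index], index);
    }
  }

  const workerCount = Math.max(1, Math.min(limit, items.length));
  await Promise.all(Array.from({ length: workerCount }, worker));
  return results;
}
```

With this helper, `mapWithLimit(users, 2, getUserData)` would scrape at most two channels at a time while still returning the results in the original order. A limit of 1 makes the scraping fully sequential, which is slower but uses the least memory.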

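Here's what the extracted data in profileData.json should look like (formatted for readability; the figures reflect each channel at the time of writing):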

```json
[
  {
    "avatar": "https://yt3.googleusercontent.com/lkH37D712tiyphnu0Id0D5MwwQ7IRuwgQLVD05iMXlDWO-kDHut3uI4MgIEAQ9StK0qOST7fiA=s176-c-k-c0x00ffffff-no-rj",
    "channelName": "Marques Brownlee ",
    "channelHandle": "@mkbhd",
    "totalSubscribers": "16.7M subscribers",
    "totalVideos": "1.5K videos"
  },
  {
    "avatar": "https://yt3.googleusercontent.com/ytc/AL5GRJVuqw82ERvHzsmBxL7avr1dpBtsVIXcEzBPZaloFg=s88-c-k-c0x00ffffff-no-rj",
    "channelName": "MrBeast ",
    "channelHandle": "@MrBeast",
    "totalSubscribers": "138M subscribers",
    "totalVideos": "736 videos"
  },
  {
    "avatar": "https://yt3.googleusercontent.com/ytc/AL5GRJVaP8qqhnaBvlgkQDRWDONrWTbpYSMOv7hwHI235w=s88-c-k-c0x00ffffff-no-rj",
    "channelName": "Apple ",
    "channelHandle": "@Apple",
    "totalSubscribers": "16.9M subscribers",
    "totalVideos": "192 videos"
  },
  {
    "avatar": "https://yt3.googleusercontent.com/ytc/AL5GRJUNolsa3j03QZxx3egj_yEP6gNK4lzbFRCUgdoi6SM=s176-c-k-c0x00ffffff-no-rj",
    "channelName": "Google ",
    "channelHandle": "@Google",
    "totalSubscribers": "10.9M subscribers",
    "totalVideos": "2.7K videos"
  },
  {
    "avatar": "https://yt3.googleusercontent.com/ytc/AL5GRJV2HsULQGRbK5DnR0b7Gv2YNLsY78LwOZQdmYsZ=s88-c-k-c0x00ffffff-no-rj",
    "channelName": "Microsoft ",
    "channelHandle": "@Microsoft",
    "totalSubscribers": "937K subscribers",
    "totalVideos": "931 videos"
  }
]
```
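The scraped counts are display strings such as "16.7M subscribers" or "1.5K videos", so they need converting before you can sort or chart them. Here is a minimal sketch of a hypothetical `parseCount` helper. It assumes the English-language K/M/B abbreviations shown in the output above, and because YouTube rounds these figures, the resulting numbers are approximations.

```javascript
// Multipliers for the abbreviations YouTube shows in English.
const SUFFIXES = { K: 1e3, M: 1e6, B: 1e9 };

// Convert a display string such as '16.7M subscribers' or '736 videos'
// into a number. Returns null if no count can be found.
function parseCount(text) {
  // A number (digits, dots, commas), optionally followed by an
  // uppercase K/M/B that ends the word.
  const match = /(\d[\d.,]*)\s*([KMB])?\b/.exec(text ?? '');
  if (!match) return null;
  const value = Number(match[1].replace(/,/g, ''));
  if (Number.isNaN(value)) return null;
  const multiplier = match[2] ? SUFFIXES[match[2]] : 1;
  // Round to remove floating-point noise from values like 16.7 * 1e6.
  return Math.round(value * multiplier);
}
```

Applied to the scraped data, `data.map((c) => ({ ...c, subscribers: parseCount(c.totalSubscribers) }))` would add a numeric field you can sort by.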

Conclusion

Thanks for reading this far! At this point, you've learned what web scraping is and why it is useful, and how a website such as YouTube can be scraped using Puppeteer.

Like Puppeteer, Zenrows helps you scrape websites, including those protected by CAPTCHAs, which are otherwise hard to scrape. It supports multiple programming languages and includes built-in anti-bot bypass features to make web scraping easier.